AI Governance Intake Prioritization Workflow

Artificial Intelligence, or AI, is a type of technology that helps computers do smart tasks. Many companies use AI to answer questions, organize information, and make work faster.

But even though AI can be very helpful, it can also cause problems if no one checks it properly. Sometimes AI can make wrong choices or use information in an unsafe way.

To prevent these problems, companies follow a clear step-by-step system before they use any new AI idea. This system is called an AI governance intake prioritization workflow.

It may sound like a long phrase, but the idea is simple: it is a process that helps companies collect AI ideas, check them for safety, and decide which ones should be approved first.

This workflow helps make sure that every AI project is safe, fair, and useful before it is used. It also helps companies avoid mistakes and protect people’s information.

In this article, you’ll learn what this workflow is, why it matters, and how it works in the simplest way possible.

What Is AI Governance?

AI governance is a set of rules and steps that guides how a company should use Artificial Intelligence (AI). It helps make sure AI is safe, fair, and helpful for people. You can think of it like the safety instructions for using a powerful tool. Without instructions, things can go wrong. With the right rules, problems are much less likely.

AI governance helps companies check every AI idea before using it. They make sure the AI does not share private information, treat people unfairly, or make harmful mistakes. They also make sure the AI follows all the laws about data and technology.

This system also helps companies understand how their AI works. They need to know what the AI is doing and why it is making certain decisions. This builds trust and prevents confusion.

In simple words, AI governance is the way companies make sure AI is used responsibly, safely, and in a way that protects everyone.


What Is an AI Intake Workflow?

An AI intake workflow is a simple step-by-step process a company uses to collect and check new AI ideas. It helps the company understand what the idea is, how it works, and whether it is safe to use. You can think of it like turning in a school assignment: you submit your work, and someone reviews it before accepting it.

When someone in a company wants to use a new AI tool, they fill out a short form. This form usually asks things like:

- What the AI will do

- What problem it will solve

- What information it needs

- Who will use it

- How it will help the company
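
For a team that builds its own web form, the questions above could be stored as a small record. The sketch below is only an illustration: the class name `IntakeSubmission`, its field names, and the `missing_fields` helper are assumptions made for this example, not part of any real tool.

```python
from dataclasses import dataclass


@dataclass
class IntakeSubmission:
    """One submitted AI idea. All field names are illustrative assumptions."""
    purpose: str            # what the AI will do
    problem: str            # what problem it will solve
    data_needed: list[str]  # what information it needs
    users: str              # who will use it
    benefit: str            # how it will help the company

    def missing_fields(self) -> list[str]:
        """Return the names of fields that were left blank."""
        missing = []
        for name, value in vars(self).items():
            is_blank_text = isinstance(value, str) and not value.strip()
            if not value or is_blank_text:
                missing.append(name)
        return missing


submission = IntakeSubmission(
    purpose="Draft replies to customer emails",
    problem="Support staff spend hours on routine questions",
    data_needed=["customer messages"],
    users="Support team",
    benefit="",  # left blank on purpose
)
print(submission.missing_fields())
```

Here the `benefit` answer was left blank, so the check reports it and the form can be sent back before any review starts.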

After the idea is submitted, the workflow helps the company review it properly. This process makes sure the AI idea:

- Is safe for people

- Uses information correctly

- Does not break any rules

- Does not harm or confuse users

- Is actually useful and needed

The AI intake workflow keeps everything organized so the company doesn’t rush into using an unsafe or confusing AI tool. It also makes sure every idea is checked fairly and clearly before moving forward.

In simple words, an AI intake workflow helps companies collect good AI ideas, understand them better, and choose only the safest and most helpful ones.

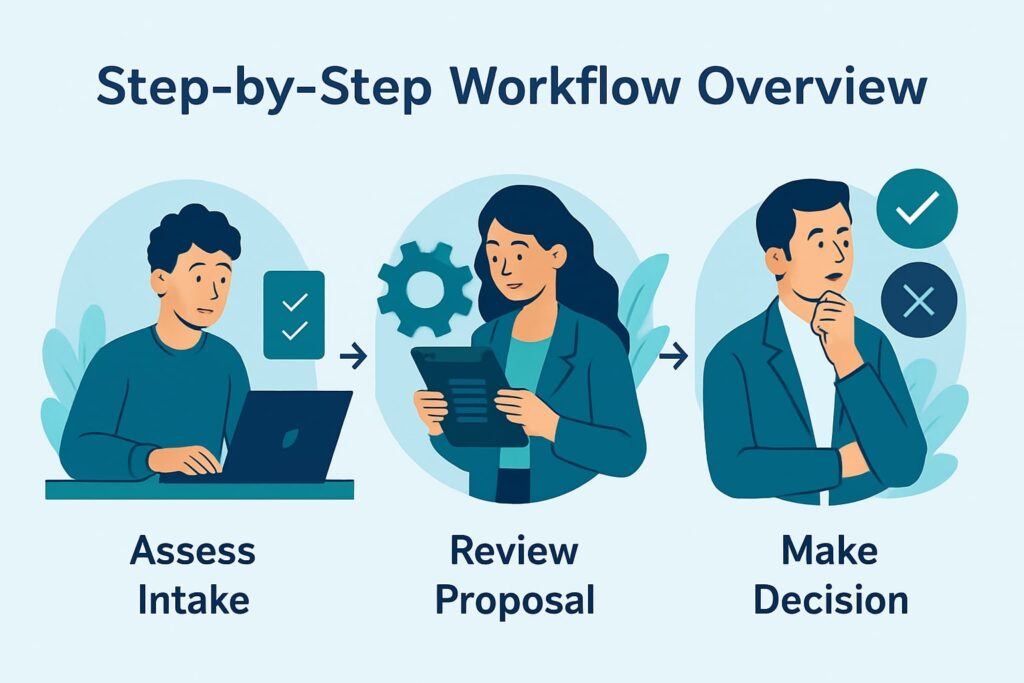

Step-by-Step Workflow Overview

This section explains how an AI idea moves through the workflow from start to finish. Each step is simple and helps the company check if the AI project is safe, fair, and useful.

Submitting an AI Idea

This is the first step. Someone in the company has an AI idea and fills out a short form about it. They write what the AI will do, what problem it will solve, and what kind of information it needs. This helps reviewers understand the idea clearly.

Quick Risk Screening

After the idea is submitted, the company takes a quick look to see if the project could be risky. They check if the AI will use private information, affect people directly, or make important decisions. This step helps the team understand how serious or simple the project is.
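
The three screening questions in this step can be turned into a very small check. This is a rough sketch, not a standard method: the function name `screen_risk` and the cut-offs (two or more "yes" answers means high risk) are assumptions chosen for the example, and a real company would set its own.

```python
def screen_risk(uses_private_data: bool,
                affects_people: bool,
                makes_important_decisions: bool) -> str:
    """Return a rough risk level from three yes/no screening questions.

    The thresholds are illustrative assumptions, not an industry rule.
    """
    # In Python, True counts as 1 and False as 0, so sum() counts the "yes" answers.
    yes_count = sum([uses_private_data, affects_people, makes_important_decisions])
    if yes_count >= 2:
        return "high"
    if yes_count == 1:
        return "medium"
    return "low"


# A chatbot that reads customer messages and talks to customers directly.
print(screen_risk(uses_private_data=True,
                  affects_people=True,
                  makes_important_decisions=False))
```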

Prioritization and Scoring

In this step, the company gives the idea a score. This score helps decide how important and urgent the project is. High-risk or high-value projects get reviewed first. Smaller or safer ideas may be reviewed later. This keeps everything organized so nothing is missed.
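
One simple way to picture scoring is to add points for risk and points for value, then sort the ideas so the highest score comes first. The sketch below assumes a made-up formula (risk points doubled, plus a value rating from 1 to 5) and made-up example ideas. Real scoring rules differ from company to company.

```python
# Points per risk level. These numbers are assumptions for illustration.
RISK_POINTS = {"low": 1, "medium": 2, "high": 3}


def priority_score(risk_level: str, business_value: int) -> int:
    """Higher score means review sooner. business_value is a 1-5 rating."""
    return RISK_POINTS[risk_level] * 2 + business_value


# Hypothetical ideas waiting in the queue.
ideas = [
    {"name": "Meeting summarizer", "risk": "low", "value": 2},
    {"name": "Loan pre-screening", "risk": "high", "value": 4},
    {"name": "Support chatbot", "risk": "medium", "value": 5},
]

# Sort so that the highest-scoring idea is reviewed first.
review_queue = sorted(
    ideas,
    key=lambda idea: priority_score(idea["risk"], idea["value"]),
    reverse=True,
)

for idea in review_queue:
    print(idea["name"], priority_score(idea["risk"], idea["value"]))
```

With these example numbers, the high-risk loan idea is reviewed first, the chatbot second, and the low-risk summarizer last.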

Routing to the Right Reviewers

Once the project has a score, it is sent to the right experts. These experts check different parts of the idea.

For example, some may look at safety, others may look at privacy, and others may look at how the AI works. Sending the idea to the right people makes the review faster and more accurate.
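
Routing can be pictured as a short list of "if this, then send it to that team" rules. The sketch below is a guess at what such rules might look like: the function name `route_reviewers`, the team names, and the rule that every idea gets a technology review are all assumptions for this example.

```python
def route_reviewers(uses_private_data: bool,
                    affects_people: bool,
                    risk_level: str) -> list[str]:
    """Pick which review teams should look at an idea (illustrative rules)."""
    # Assumption: every idea gets a look at how the AI works.
    reviewers = ["technology"]
    if uses_private_data:
        reviewers.append("privacy")
    if affects_people or risk_level == "high":
        reviewers.append("safety")
    return reviewers


print(route_reviewers(uses_private_data=True,
                      affects_people=False,
                      risk_level="medium"))
```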

Deep Risk and Safety Review

Here, the project is checked more carefully. Experts make sure the AI is safe, fair, and does not harm anyone. They look at how the AI makes decisions and whether it uses information in the right way. This is the most detailed part of the workflow.

Final Decision (Approve, Revise, or Reject)

After the review, the team decides what to do with the project. They may approve it, ask for changes, or reject it if it is unsafe. If changes are needed, the team explains what must be fixed before the AI can move forward.
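
The three possible outcomes can be written down as a tiny decision rule. This is a simplified sketch under stated assumptions: the names `Decision` and `decide` are made up, and it assumes reviewers mark each problem they find as fixable or not. Real teams use judgment, not just a rule like this.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REVISE = "revise"
    REJECT = "reject"


def decide(findings: list[dict]) -> tuple[Decision, list[str]]:
    """Turn review findings into a decision plus a list of required fixes.

    Each finding is assumed to look like {"issue": str, "fixable": bool}.
    """
    # Any problem that cannot be fixed means the idea is unsafe to continue.
    if any(not finding["fixable"] for finding in findings):
        return Decision.REJECT, []
    # Fixable problems send the idea back with an explanation of what to change.
    if findings:
        return Decision.REVISE, [finding["issue"] for finding in findings]
    return Decision.APPROVE, []


decision, fixes = decide([
    {"issue": "Replies sometimes include wrong product sizes", "fixable": True},
])
print(decision.value, fixes)
```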

Ongoing Monitoring After Approval

Even after the AI project is approved, the work doesn’t stop. The company keeps an eye on it to make sure it still works correctly and stays safe. They check if the AI is making fair decisions and not causing problems. This helps protect people and the company over time.
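
A basic form of monitoring is to sample some of the AI's recent outputs, have someone mark each one as correct or not, and raise a flag if too many are wrong. The sketch below assumes a made-up function name, `needs_re_review`, and a made-up limit of 5% errors. Dedicated monitoring tools track much more than this.

```python
def needs_re_review(recent_outcomes: list[bool],
                    max_error_rate: float = 0.05) -> bool:
    """Return True if the AI should go back for another review.

    recent_outcomes holds True for each sampled output judged correct
    and False for each one judged wrong. The 5% limit is an assumption.
    """
    if not recent_outcomes:
        # No samples means no evidence the AI is still working well.
        return True
    error_count = recent_outcomes.count(False)
    return error_count / len(recent_outcomes) > max_error_rate


# Ten sampled replies, two of them judged wrong (20% errors).
sample = [True] * 8 + [False] * 2
print(needs_re_review(sample))
```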

Tools Used in the Workflow

Companies use different tools to keep the AI workflow organized and easy to follow. These tools help collect ideas, review them, and make sure nothing is missed. Think of them like digital helpers that keep everything neat, just like school supplies help you stay organized.

Here are some real tools companies use:

Tools for Submitting Ideas

These tools help people fill out forms and send their AI ideas:

- Google Forms

- Microsoft Forms

- ServiceNow Forms

- Custom company web forms

Tools for Tracking Projects

These tools help the company see where each AI idea is in the workflow, like a progress tracker:

- Jira

- ServiceNow

- Asana

- Trello

Tools for Reviewing Risks and Safety

These platforms help experts check if the AI idea is safe, fair, and follows the rules:

- IBM Watson OpenScale

- Google Vertex AI Evaluation tools

- Microsoft Responsible AI Dashboard

- Fiddler AI

- Truera

Tools for Checking Data Privacy

These tools help the company make sure personal information is protected:

- OneTrust

- BigID

- TrustArc

Tools for Storing Documents

These tools keep all files, reports, and decisions in one safe place:

- Google Drive

- Microsoft SharePoint

- Dropbox

Tools for Monitoring AI After Approval

These tools help watch how the AI behaves over time:

- Fiddler AI monitoring

- AWS CloudWatch

- Azure Monitor

- Datadog

Common Challenges and How to Fix Them

Even with a good workflow, companies can still face problems when handling many AI ideas. These problems are normal, and the good news is that they can be fixed with simple steps.

One common challenge is getting too many AI ideas at the same time. When this happens, the review team may feel overwhelmed and slow down. The fix is to set clear rules on which projects should be checked first so nothing becomes messy.

Another challenge is unclear forms or instructions. If people don’t understand what to write, the review team won’t understand the project. The fix is to keep forms simple and use short, clear questions.

Sometimes teams face slow communication. People don’t know where their project is in the process, so they get confused or worried. The fix is to give regular updates and let teams see their project status.

Another problem is different teams wanting different things. For example, business teams want fast results, while safety teams want careful checks. The fix is to meet in the middle: keep the safety checks, but move low-risk ideas through quickly when possible.

Finally, some companies struggle because employees don’t know how the workflow works. The fix is to train everyone so they know how to submit ideas and why the workflow matters.

Case Study: A Small Business Learns to Use AI Safely

A small online clothing shop wanted to use an AI tool to help write product descriptions and reply to customers. The owner hoped this would save time and make work easier. Before using the AI, the shop followed a simple check-up process to make sure the tool was safe.

During the check, they found some problems. The AI sometimes wrote details that were not true, like the wrong size or wrong colors. It also sent messages that sounded rude or too robotic. The owner was worried that customers might get confused or upset. They also noticed the AI tried to guess things it shouldn’t, which made the owner worry about privacy.

Because the shop checked the AI first, they were able to fix these problems. They decided that a human must read everything the AI writes before it is sent to customers. They also gave the AI clear instructions so it only used correct information from the shop. Finally, they made a simple list of rules the AI must follow every time it writes something.

After these changes, the AI became helpful instead of risky. The shop saved time, avoided mistakes, and kept customers happy. This shows how even a small business can use AI safely when it checks the tool carefully before using it.

FAQs

1. What is an AI intake workflow?

An AI intake workflow is a simple process that helps companies collect, review, and understand new AI ideas. It keeps everything organized so teams know what the idea is, how it works, and whether it is safe to use.

2. Why do companies need this workflow?

Companies need this workflow to stop unsafe or unfair AI projects from moving forward. It helps them protect people’s data, follow rules, and make sure only responsible AI ideas get approved.

3. Who reviews the AI ideas?

Different experts review the ideas, such as safety teams, privacy teams, and technology teams. They work together to check if the AI is safe, helpful, and does not cause harm to anyone.

4. What does prioritization mean in this workflow?

Prioritization means choosing which AI ideas should be reviewed first based on how important or risky they are. This helps the company focus on the projects that need attention right away instead of checking everything at the same time.

5. What happens if an AI idea has problems?

If an AI idea has issues, the company may ask the team to fix or improve it before moving forward. But if the problem is too big or unsafe, the company may stop the idea completely to protect people.

6. Do companies still check the AI after it gets approved?

Yes, companies continue to watch the AI even after approval to make sure it still works safely and fairly. This helps them catch new problems early and make changes before anything goes wrong.

7. How does this workflow help the company overall?

This workflow helps the company stay organized, avoid risky mistakes, and choose the best AI ideas. It builds trust and makes sure AI is used in a safe, fair, and responsible way for everyone.

Conclusion

An intake and prioritization workflow helps companies use AI safely and responsibly. It gives them a clear way to collect new ideas, check them carefully, and choose which ones should be worked on first. This keeps everything organized and prevents mistakes.

Without this workflow, unsafe or unfair AI projects might slip through. With it, companies can make better choices and protect people’s information and well-being.

In simple words, this workflow makes sure AI is used the right way. It helps companies stay safe, stay fair, and use AI to make life easier for everyone.